In 1971, John O'Keefe and Jonathan Dostrovsky reported place cells in the hippocampus: neurons that fire when an animal occupies a specific location. This finding introduced the idea of a biological spatial map. Later studies revealed that these cells are not only tuned to physical position but also modulated by contextual and multi-sensory cues. Remarkably, the same population of place cells can produce different spatial maps in different environments and return to previous maps when the animal revisits familiar ones, even after long delays or intervening learning. These properties suggest that the brain constructs spatial memory through multi-modal sensory integration and supports robust continual learning without catastrophic forgetting.

But how do such structured, flexible representations emerge? Can similar principles be observed in artificial networks trained on realistic experience streams? What kinds of learning objectives or inductive biases support these phenomena?

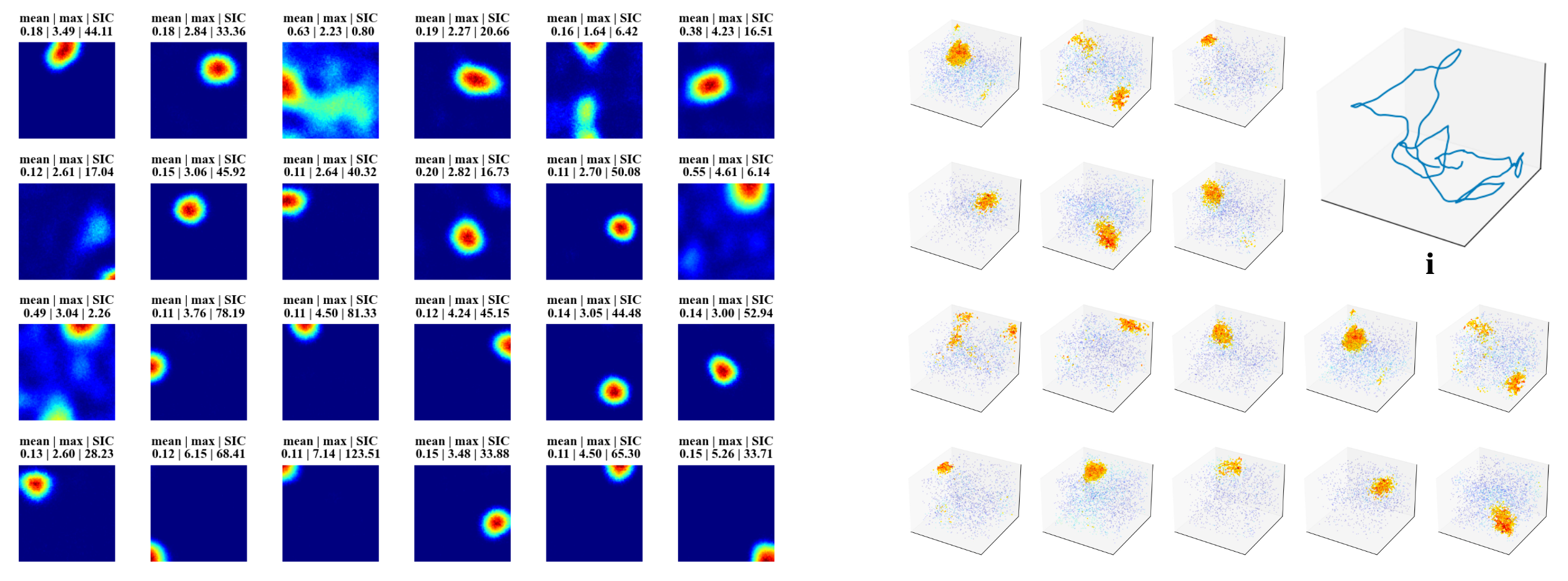

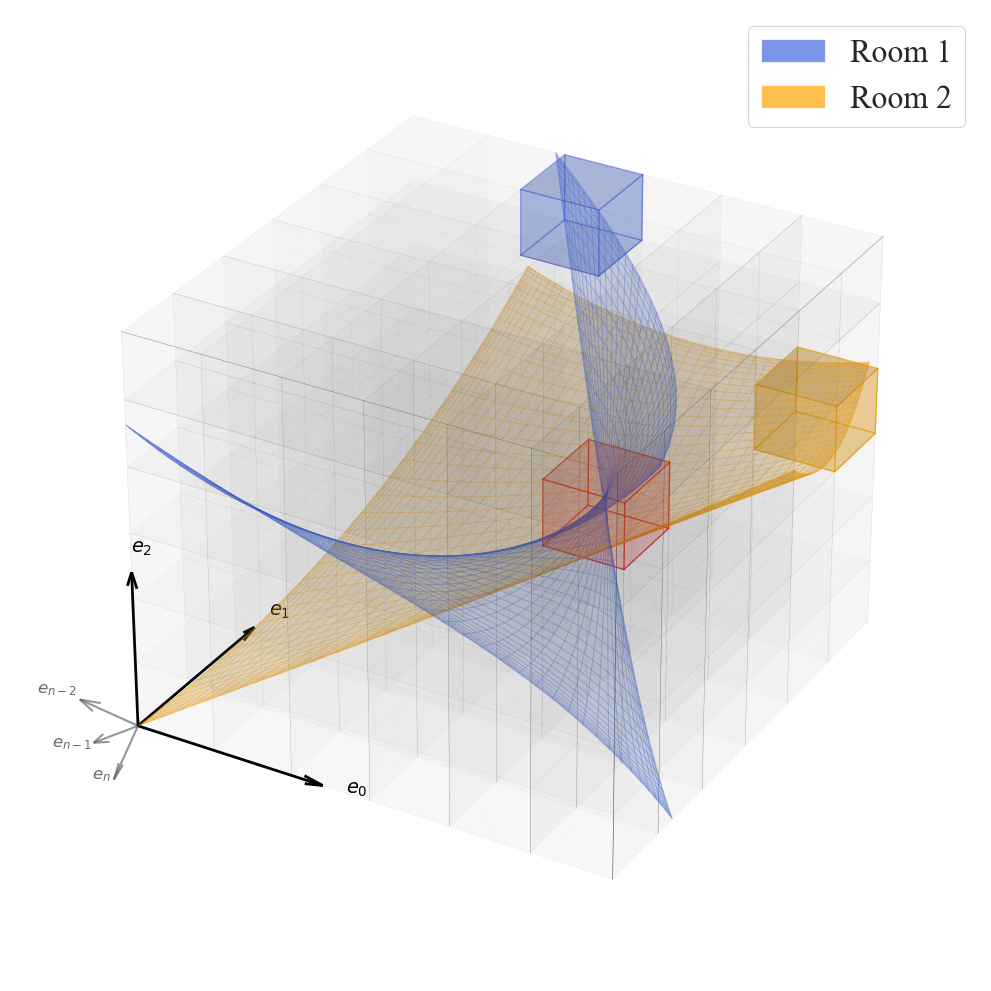

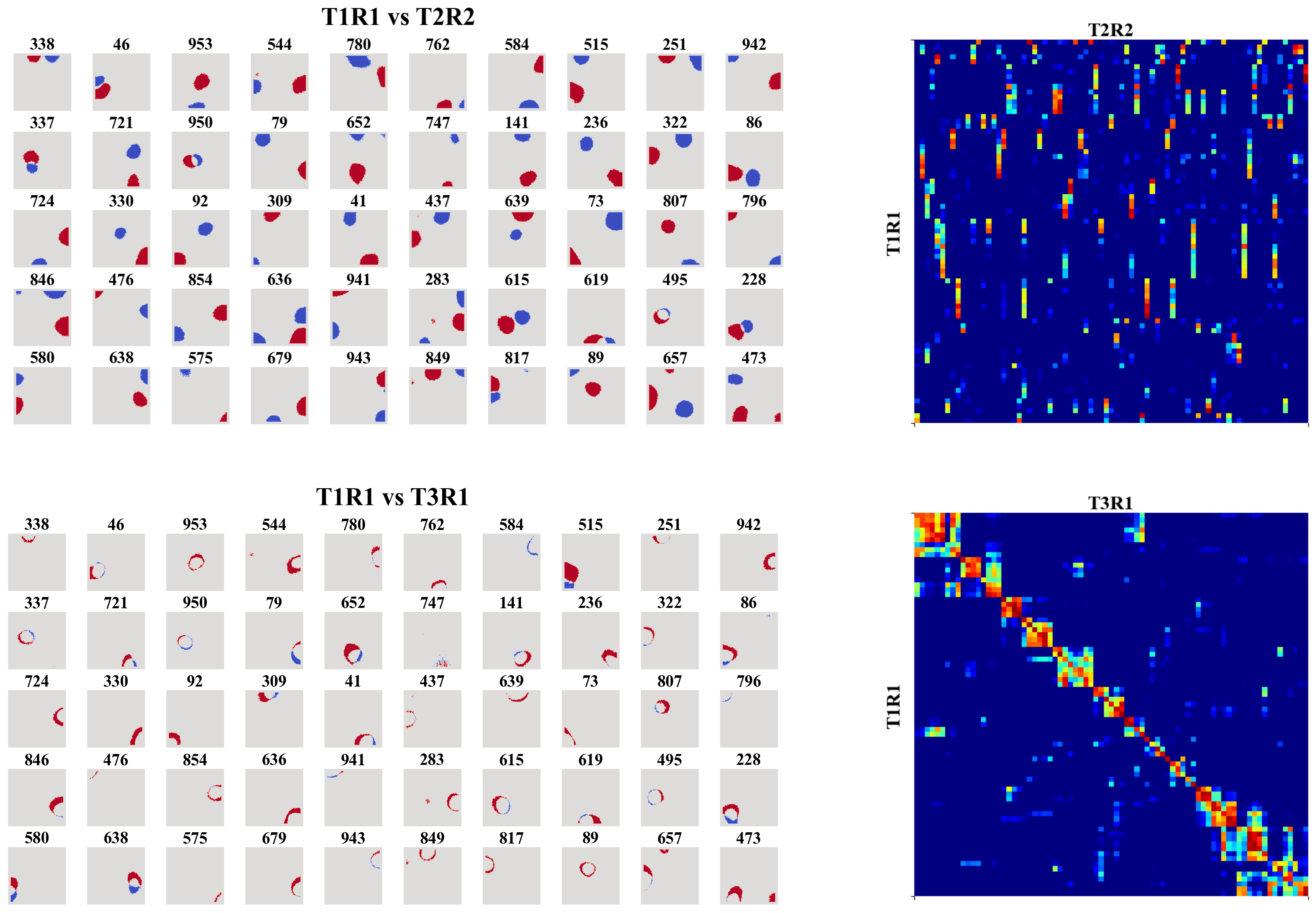

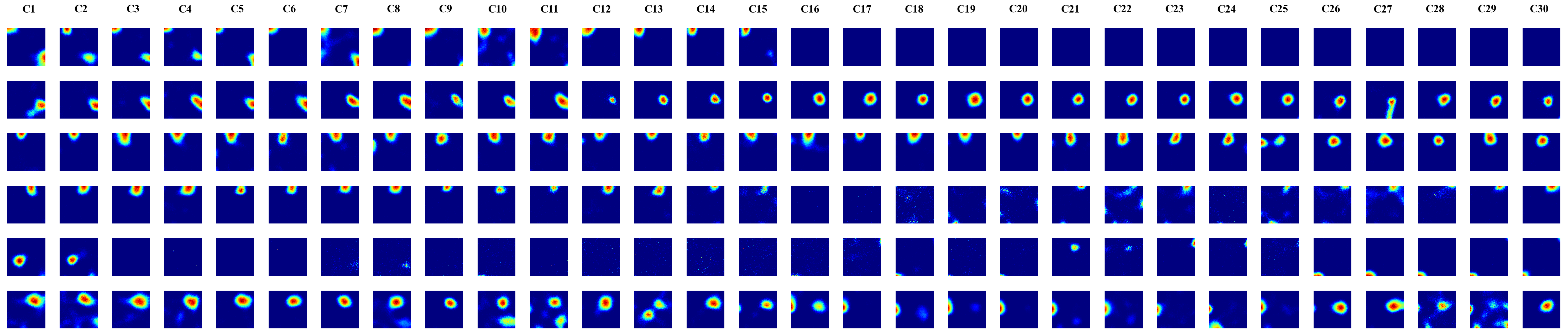

In this work, we trained a recurrent autoencoder to reconstruct high-dimensional, temporally continuous sensory signals collected by an agent traversing simulated environments. Without any explicit spatial supervision, the network developed internal representations strongly reminiscent of biological place cells: units fired in localized regions, remapped across different environments, and reverted to their earlier maps when the agent returned to familiar spaces. These representations were stable over time, formed orthogonal spatial maps that limit interference between environments, and adapted gradually, mimicking the representational drift of place cells in Cornu Ammonis area 3 (CA3). Our results suggest that spatial coding can arise naturally as a side effect of reconstructive memory over structured sensory streams, offering insights for continual representation learning in artificial agents.

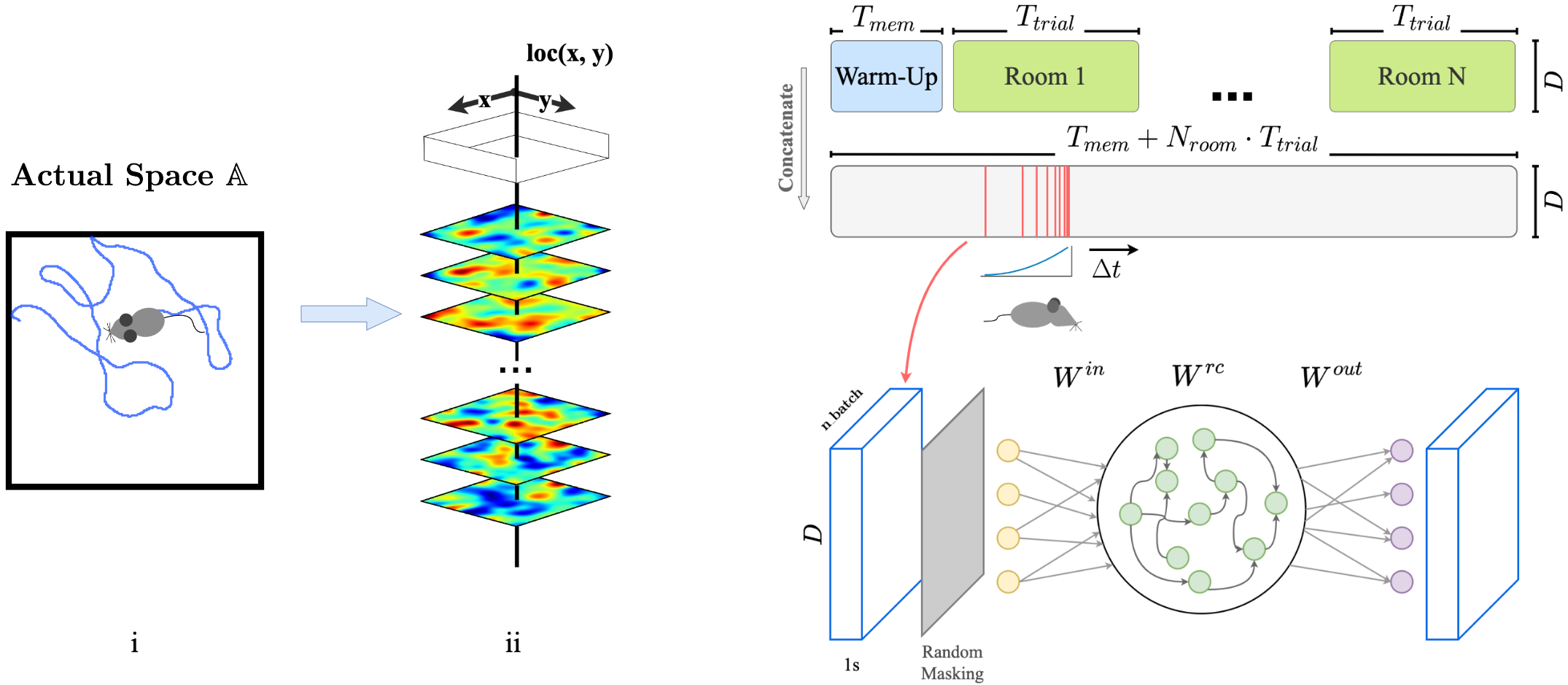

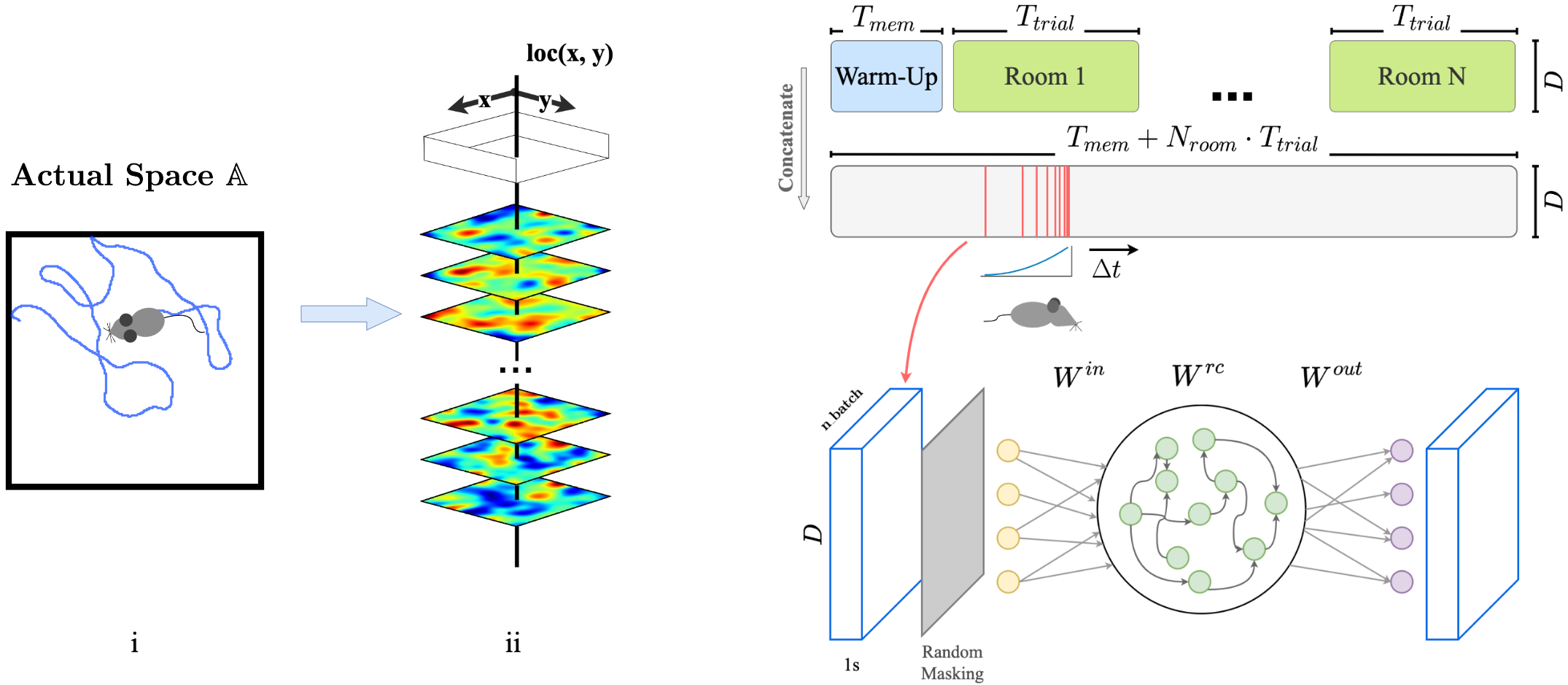

Setup

We frame the encoding of space as the task of auto-associating sensory signals (potentially from different modalities) collected during an agent's random spatial traversal. In biological systems, this kind of mechanism may improve the reliability of localization and mapping, especially in dynamic environments where subsets of sensory inputs can vary over time—making any single input insufficient on its own.

To model this process, we use a masked autoencoding objective: a recurrent neural network (RNN) is trained to reconstruct missing parts of the sensory input as the agent explores a 2D environment.
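As a rough illustration of this objective, here is a minimal NumPy sketch, not the implementation used in this work. Everything specific in it is an assumption made for illustration: the vanilla tanh RNN, the random-walk stand-in for the sensory stream, the 30% masking rate, the choice to score only the hidden entries, and all names (`mask_inputs`, `rnn_reconstruct`, `masked_reconstruction_loss`). The weights are random and untrained; training would minimize this loss by gradient descent.

```python
import numpy as np


def mask_inputs(x, mask_prob, rng):
    """Hide a random subset of sensory entries at each time step.

    x has shape (T, D). Returns the masked input (hidden entries set to 0)
    and a boolean array that is True where an entry was hidden.
    """
    hidden = rng.random(x.shape) < mask_prob
    return np.where(hidden, 0.0, x), hidden


def rnn_reconstruct(x_masked, params):
    """Run a vanilla tanh RNN over the masked sequence and decode each state."""
    W_in, W_rec, W_out = params["W_in"], params["W_rec"], params["W_out"]
    h = np.zeros(W_rec.shape[0])
    outputs = []
    for x_t in x_masked:
        h = np.tanh(W_in @ x_t + W_rec @ h)  # recurrent state update
        outputs.append(W_out @ h)            # linear readout of the full input
    return np.stack(outputs)


def masked_reconstruction_loss(x, x_hat, hidden):
    """Mean squared error over the hidden entries only (an assumed choice)."""
    n_hidden = max(int(hidden.sum()), 1)
    return float(np.sum(((x_hat - x) ** 2) * hidden) / n_hidden)


rng = np.random.default_rng(0)
T, D, H = 50, 16, 32  # time steps, sensory channels, hidden units (hypothetical)

# Stand-in for a temporally continuous sensory stream: a random walk per channel.
x = np.cumsum(rng.normal(scale=0.1, size=(T, D)), axis=0)

params = {
    "W_in": rng.normal(scale=0.1, size=(H, D)),
    "W_rec": rng.normal(scale=0.1, size=(H, H)),
    "W_out": rng.normal(scale=0.1, size=(D, H)),
}

x_masked, hidden = mask_inputs(x, mask_prob=0.3, rng=rng)
x_hat = rnn_reconstruct(x_masked, params)
loss = masked_reconstruction_loss(x, x_hat, hidden)
```

Because the hidden entries carry no information at the current step, the network can only fill them in from the visible channels and from its recurrent state, which is what ties reconstruction to the history of the traversal.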
