Trading Place for Space: Increasing Location Resolution Reduces Contextual Capacity in Hippocampal Codes

Spencer Rooke, Zhaoze Wang, Ronald W. DiTullio, and Vijay Balasubramanian

In NeurIPS 2024 (Oral), 2024

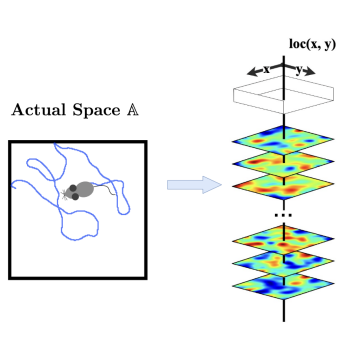

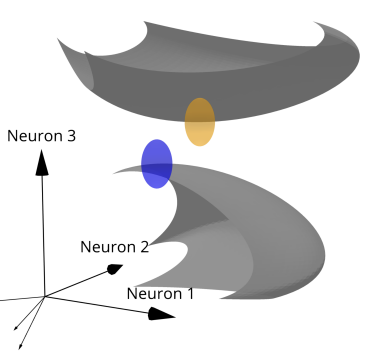

Researchers have long theorized that animals are capable of learning cognitive maps of their environment: a simultaneous representation of context, experience, and position. Since their discovery in the early 1970s, place cells have been assumed to be the neural substrate of these maps. Individual place cells explicitly encode position and appear to encode experience and context through global, population-level firing properties. Context, in particular, appears to be encoded through remapping, a process in which subpopulations of place cells change their tuning in response to changes in sensory cues. While many studies have looked at the physiological basis of remapping, the field still lacks explicit calculations of contextual capacity as a function of place field firing properties. Here, we tackle such calculations. First, we construct a geometric approach for understanding the population-level activity of place cells, assembled from known firing field statistics. We treat different contexts as low-dimensional structures embedded in the high-dimensional space of firing rates, and the distance between these structures as reflective of the discriminability between the underlying contexts. Accordingly, we investigate how changes to place cell firing properties affect the distances between representations of different environments within this rate space. Using this approach, we find that the number of contexts storable by the hippocampus grows exponentially with the number of place cells, and we calculate this exponent for environments of different sizes. We further identify a fundamental tradeoff between high-resolution encoding of position and the number of storable contexts. This tradeoff is tuned by place cell width, which might explain the change in firing field scale along the ventral-dorsal axis of the hippocampus.
Finally, we demonstrate that clustering of place cells near likely points of confusion, such as boundaries, increases the contextual capacity of the place system within our framework.
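The geometric picture described above can be illustrated with a toy model. This is a minimal sketch, not the authors' implementation: it assumes a one-dimensional environment of unit length, Gaussian place fields sharing a single width, and a hypothetical remapping rule in which each context draws independent, uniformly random field centers. Under these assumptions each context traces a one-dimensional curve in the N-dimensional rate space, and the minimum Euclidean distance between two such curves serves as a crude proxy for how discriminable the contexts are. The function names (`place_rates`, `make_context`, `manifold_distance`) are illustrative only.

```python
import numpy as np

def place_rates(positions, centers, width, peak=1.0):
    """Gaussian tuning curves: returns rates of shape (n_positions, n_cells)."""
    offsets = positions[:, None] - centers[None, :]
    return peak * np.exp(-0.5 * (offsets / width) ** 2)

def make_context(rng, n_cells, length):
    """Hypothetical remapping rule: every cell gets an independent, uniformly random field center."""
    return rng.uniform(0.0, length, size=n_cells)

def manifold_distance(rates_a, rates_b):
    """Minimum Euclidean distance between two sampled context manifolds in rate space."""
    diff = rates_a[:, None, :] - rates_b[None, :, :]  # differences for all position pairs
    return float(np.sqrt((diff ** 2).sum(axis=-1)).min())

rng = np.random.default_rng(0)
positions = np.linspace(0.0, 1.0, 200)  # assumed 1D track of unit length
n_cells = 50
ctx_a = make_context(rng, n_cells, 1.0)
ctx_b = make_context(rng, n_cells, 1.0)

# Compare the closest approach of the two context manifolds for several field widths.
for width in (0.02, 0.1, 0.3):
    rates_a = place_rates(positions, ctx_a, width)
    rates_b = place_rates(positions, ctx_b, width)
    print(f"width={width}: min distance between contexts = {manifold_distance(rates_a, rates_b):.3f}")
```

Sweeping `width` gives a rough feel for how field size changes the separation between context manifolds. A more careful analysis would also need a noise model and realistic field statistics, which this sketch omits.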

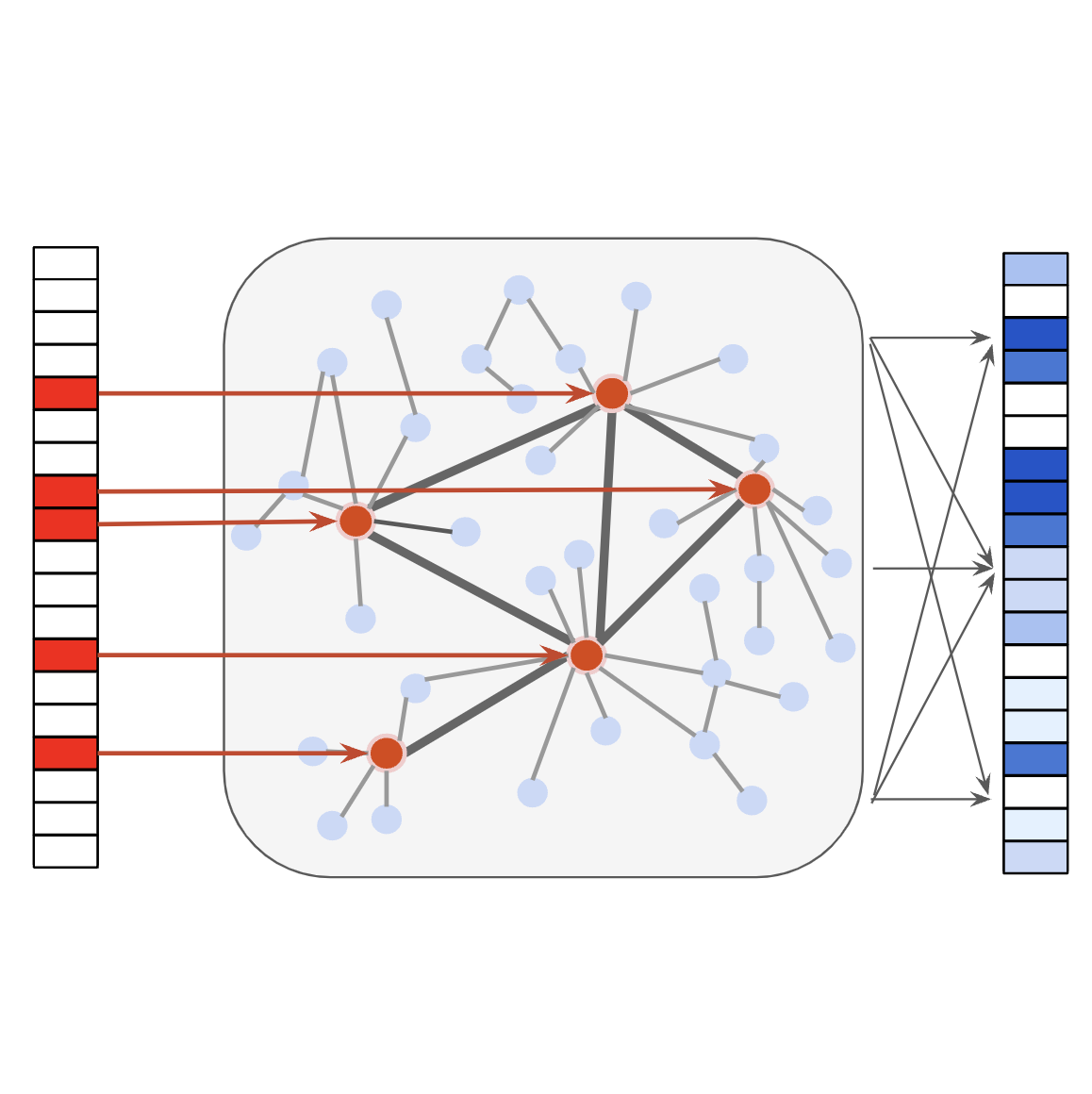

A Versatile Hub Model For Efficient Information Propagation And Feature Selection, 2023

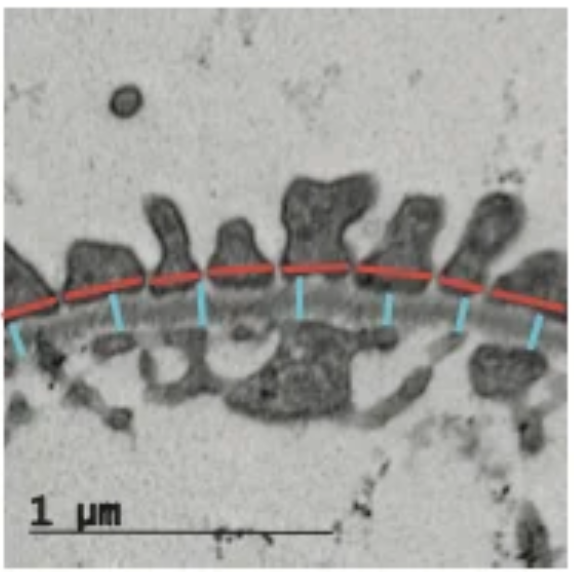


Computational Assessment of Glomerular Basement Membrane Width and Podocyte Foot Process Width in an Animal Model of Podocytopathy. Journal of the American Society of Nephrology, 2022